The moment all the hardcore enthusiasts have been waiting for is here: the specifications and price of the NVIDIA GeForce GTX Titan X have finally been revealed. Does it cost $3,000? Surprisingly not. NVIDIA is going for a much lower price tag of $999, and judging from the performance on offer, that is a very low price to pay compared to the $3,000 we had to pay last year. NVIDIA CEO and co-founder Jen-Hsun Huang showcased the GTX Titan X as well as the new DIGITS Deep Learning GPU Training System and the DIGITS DevBox. Enough of me talking about the stuff you barely care about. Let’s talk numbers!

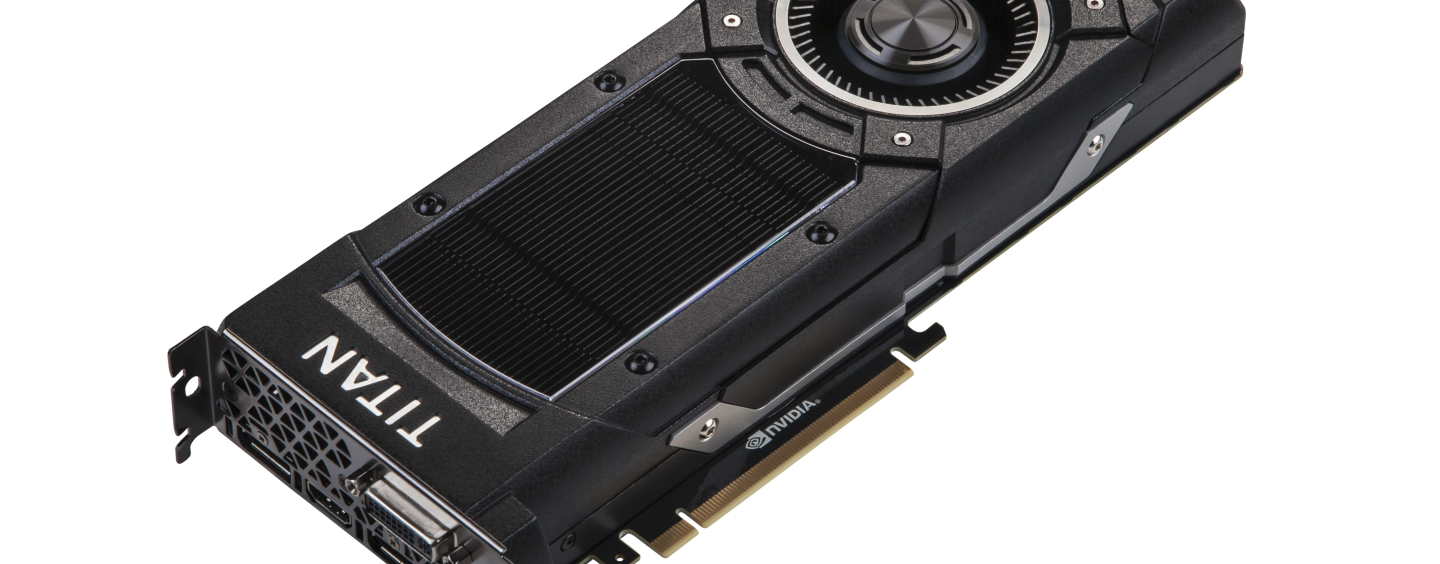

The GeForce GTX Titan X

The GeForce GTX Titan X promises to run games like Middle-earth: Shadow of Mordor at 40FPS on high settings with FXAA enabled, which is pretty insane. It also promises to deliver twice the performance and power efficiency of the GTX 980 by squeezing in 3072 CUDA Cores for 7 teraflops of peak single-precision performance, paired with 12GB of VRAM. The card also comes with 336.5GB/s of memory bandwidth. It is truly the future of VR and 4K gaming. Full specifications can be found on the specifications page provided by NVIDIA.

- 3072 CUDA Cores

- Base Clock: 1000 MHz / Boost Clock: 1075 MHz

- Texture Fill Rate: 192 GigaTexels/s

- Memory Clock: 7000 MHz

- VRAM: 12GB GDDR5, 384-bit @ 336.5GB/s

- TDP: 250W

- Maxwell 2 GM200

- Transistor Count: 8 Billion

- TSMC 28nm
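
The headline figures can be sanity-checked from the list above with some back-of-the-envelope arithmetic. The sketch below is only an illustration, not NVIDIA's own method: it uses the 3072 CUDA cores quoted in the text, and it assumes that each core performs one fused multiply-add (counted as 2 floating-point operations) per clock and that the listed 7000 MHz memory clock is the effective data rate per pin. The function names are made up for this example.

```python
# Back-of-the-envelope check of the listed specifications.
# Assumption (not stated in the article): each CUDA core retires one fused
# multiply-add per clock, which is counted as 2 floating-point operations.
FLOPS_PER_CORE_PER_CLOCK = 2


def peak_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision throughput in teraflops."""
    return cuda_cores * FLOPS_PER_CORE_PER_CLOCK * clock_mhz * 1e6 / 1e12


def memory_bandwidth_gbs(effective_clock_mhz: float, bus_width_bits: int) -> float:
    """Theoretical memory bandwidth in GB/s, assuming the clock given is the
    effective data rate per pin and 1 GB = 1e9 bytes."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_clock_mhz * 1e6 * bytes_per_transfer / 1e9


base = peak_tflops(3072, 1000)              # base clock from the list
boost = peak_tflops(3072, 1075)             # boost clock from the list
bandwidth = memory_bandwidth_gbs(7000, 384)  # memory clock and bus width

print(f"Peak FP32 at base clock:  {base:.2f} TFLOPS")
print(f"Peak FP32 at boost clock: {boost:.2f} TFLOPS")
print(f"Memory bandwidth:         {bandwidth:.1f} GB/s")
```

With these inputs the estimate comes out at roughly 6.1 teraflops at base clock and 6.6 teraflops at boost, so the quoted 7 teraflops reads as a rounded-up figure. The bandwidth works out to 336 GB/s, and the small gap to the listed 336.5GB/s is presumably down to the memory clock being rounded to 7000 MHz.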

The GTX Titan X runs on Maxwell 2 and comes with all that Maxwell goodness combined with the UE4 engine, including MFAA, Dynamic Super Resolution and Voxel Global Illumination. It supports 4-way SLI as well as a maximum digital resolution of 5120×3200 when using two DisplayPort connections.

DIGITS: Deep Learning

Using deep neural networks (DNN) to train computers to classify and recognize objects is a laborious and time-consuming task. Most hardware does not have the computing power to recognize descriptors fast enough for real-time surveillance or live feeds. The DIGITS Deep Learning GPU Training System is here to change that by providing the necessary tools, from A to Z, for building the best possible neural net. DIGITS is an all-in-one graphical system for designing, training and validating DNNs for image classification.

The GTX Titan X is not just for gaming, but was made for industrial use as well. DIGITS will guide users from the outset on how to go about handling the DNN. Deep learning with the GTX Titan X will speed up progress in the field of machine learning, starting with visual tasks such as video classification and computer vision, and non-visual tasks such as speech recognition, natural language processing and audio recognition.

The success of DNNs will definitely be accelerated by the use of GPUs, which have been the platform of choice for the past few years, enabling data scientists to monitor and visualize data in real time. The DIGITS system was designed for fast migration over to the new developer environment. It provides tools for DNN optimization, and the main console lists databases from the local machine or data from the web. You can track adjustments you have made to the network and optimize for maximum accuracy.

DIGITS runs as a web server and makes it easy for multiple data scientists to work on the platform at the same time without any clash of data. This would be heaven for engineers working on big data clouds: visualization of data can take forever at times, and the power of the GTX Titan X would definitely benefit many programmers out there. Development as well as testing will be a breeze. Now all NVIDIA has to do is convince companies of the prowess of its technology, as big data engineers are still relying on Xeon processors for their systems.