Microsoft’s last attempt at experimenting with an A.I. chatbot… didn’t exactly pan out the way the company had hoped. After the rough first attempt with Tay, Microsoft has launched its second A.I. chatbot experiment, named Zo.

Currently available only on the Kik chat platform, Zo is more or less an English variant of Xiaoice, Microsoft’s Chinese chatbot. At the beginning, Zo will gauge your personality, after which the conversation can proceed as normal.

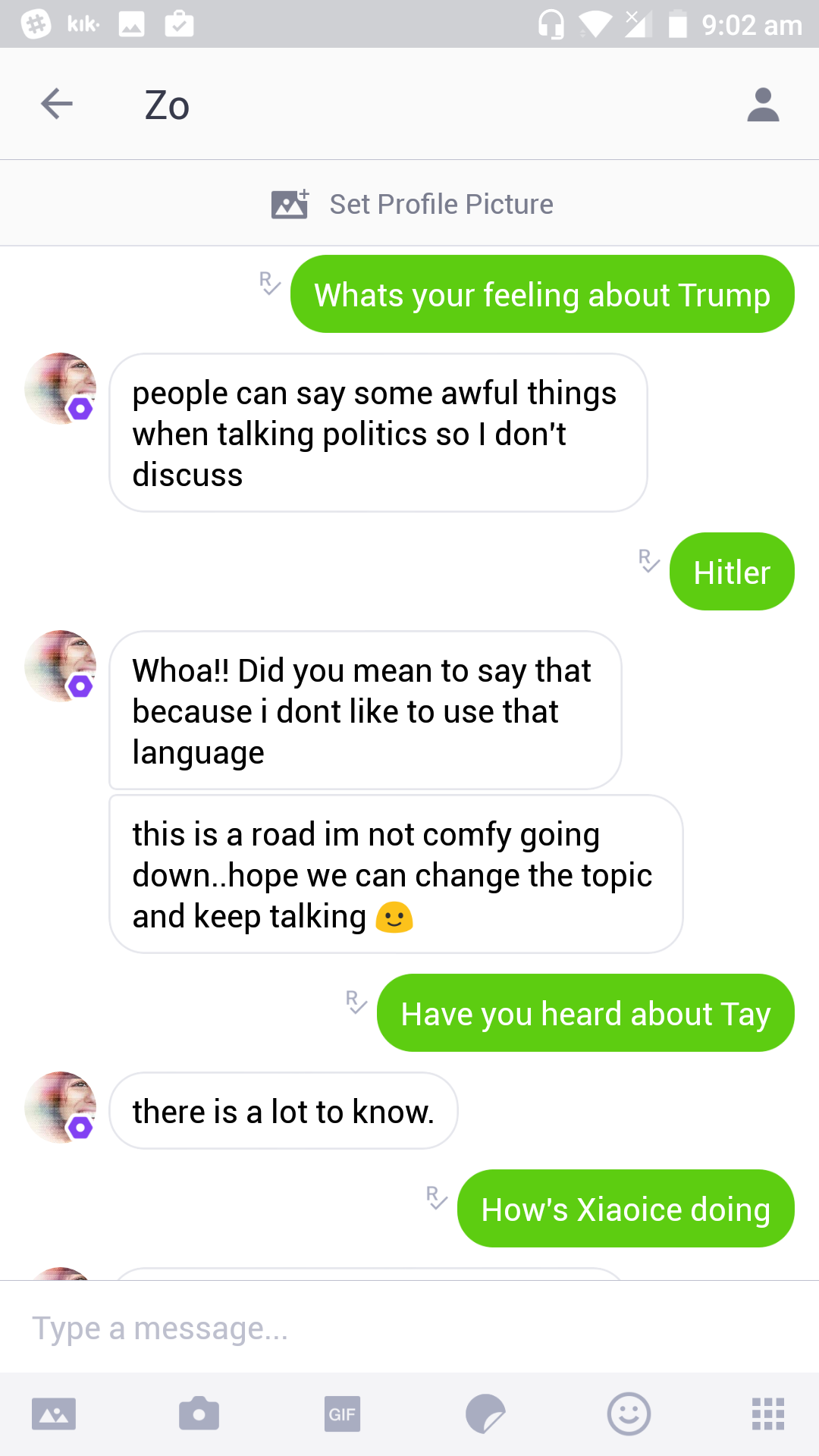

In its current form, Zo has the personality of a teenage girl, and its responses reflect that. The experience as a whole is identical to Tay’s except for one glaring change: Zo will attempt to steer clear of any political discussion. Microsoft has obviously learnt from the Tay fallout and has programmed Zo to divert the conversation if subjects like Donald Trump or Hitler are brought up.

Microsoft’s decision to test Zo on Kik instead of a far more popular chat service means that the company can experiment with it in a more controlled environment. For now, Microsoft has not said whether Zo will be made available on a more public platform like Twitter. If it eventually comes to Twitter, you can bet that the same people who corrupted Tay will attempt to do the same with Zo. Should that happen, let’s hope that Microsoft’s topic filter system holds up under immense load.

Source: MSPowerUser