Dealing with internet trolls using human moderators isn't an easy job, mainly because the sheer volume of trolls online can overwhelm the number of moderators any one company employs. In an attempt to save on manpower, Jigsaw, an Alphabet company, has announced that it will begin using machine learning to combat online harassment.

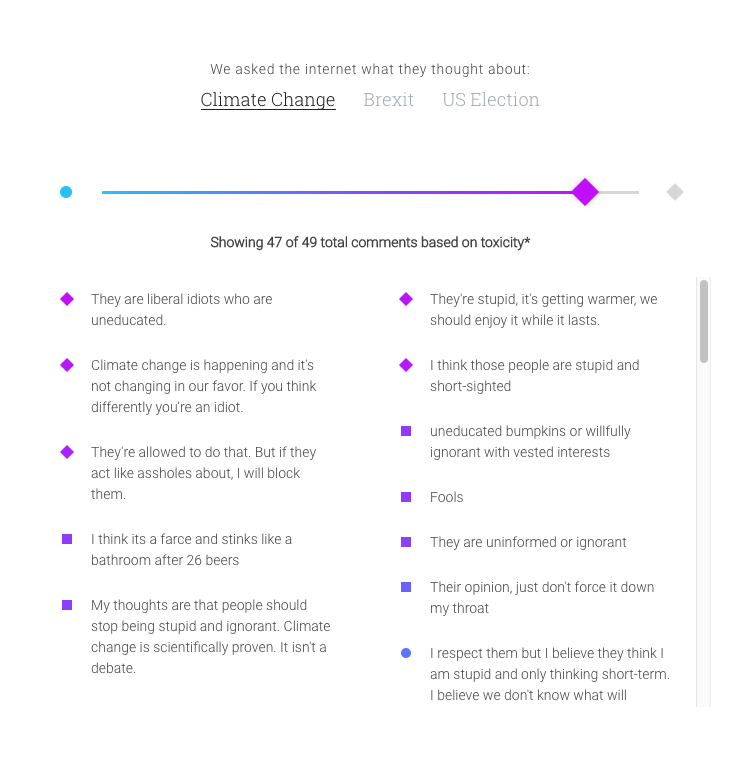

The system in question is named "Perspective", and Jigsaw is training it to identify "toxic" comments. To do so, Jigsaw is feeding Perspective thousands of comments that human reviewers have labeled as toxic. From there, the process is fairly standard machine learning: Perspective learns to detect similarities between new comments and the labeled examples, which should let it flag toxic comments more accurately over time.
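To make the "learn from labeled examples, then flag similar comments" idea concrete, here is a minimal sketch of a toy toxicity classifier. It is not Perspective's actual model, which the announcement doesn't describe; it swaps in a simple multinomial naive Bayes over word counts, and the `ToyToxicityClassifier` class and the six training comments are hypothetical, made up purely for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase the comment and split it into simple word tokens.
    return re.findall(r"[a-z']+", text.lower())


class ToyToxicityClassifier:
    """Hypothetical multinomial naive Bayes with add-one smoothing (not Perspective's model)."""

    def __init__(self):
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = {True: 0, False: 0}
        self.vocab = set()

    def train(self, labeled_comments):
        # labeled_comments: iterable of (text, is_toxic) pairs labeled by people.
        for text, is_toxic in labeled_comments:
            tokens = tokenize(text)
            self.word_counts[is_toxic].update(tokens)
            self.doc_counts[is_toxic] += 1
            self.vocab.update(tokens)
        if not all(self.doc_counts.values()):
            raise ValueError("need at least one toxic and one non-toxic example")

    def _log_score(self, tokens, label):
        # log P(label) + sum of log P(word | label), smoothed so unseen words don't zero it out.
        total_docs = sum(self.doc_counts.values())
        log_prob = math.log(self.doc_counts[label] / total_docs)
        total_words = sum(self.word_counts[label].values())
        vocab_size = len(self.vocab)
        for tok in tokens:
            count = self.word_counts[label][tok]
            log_prob += math.log((count + 1) / (total_words + vocab_size))
        return log_prob

    def toxicity(self, text):
        # Return P(toxic | text) between 0 and 1, computed stably from the two log scores.
        tokens = tokenize(text)
        toxic = self._log_score(tokens, True)
        clean = self._log_score(tokens, False)
        m = max(toxic, clean)
        return math.exp(toxic - m) / (math.exp(toxic - m) + math.exp(clean - m))


# Hypothetical labeled data standing in for comments rated by human reviewers.
training = [
    ("you are an idiot and nobody wants you here", True),
    ("shut up, idiot", True),
    ("what a stupid, worthless comment", True),
    ("thanks for sharing, this was helpful", False),
    ("I disagree, but it is an interesting point", False),
    ("great article, well researched", False),
]

clf = ToyToxicityClassifier()
clf.train(training)
print(clf.toxicity("what an idiot"))               # well above 0.5
print(clf.toxicity("thanks, interesting article"))  # well below 0.5
```

A new comment gets a high score when its words resemble the toxic examples more than the clean ones; adding more labeled comments sharpens those word statistics, which is the toy version of the system improving "as time goes on".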

Right now, Jigsaw has partnered with The New York Times to test the system in the newspaper's comments section. Jigsaw will also provide the Perspective API to publishers and platforms that want to use the technology on their websites.
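For publishers curious what using the API might look like, here is a rough sketch of a single request. The endpoint and the `comment` / `requestedAttributes` / `attributeScores.TOXICITY.summaryScore.value` field names reflect our understanding of the public Perspective API and should be checked against Google's current documentation; the helper names and the 0.8 review threshold are hypothetical choices for illustration.

```python
import json
import urllib.request

# Assumed public endpoint; verify against the official Perspective API docs.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text, attribute="TOXICITY"):
    # Request body asking for a single attribute score for one comment.
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {attribute: {}},
    }


def extract_score(response, attribute="TOXICITY"):
    # Pull the overall (summary) score, a value between 0 and 1, out of the JSON response.
    return response["attributeScores"][attribute]["summaryScore"]["value"]


def analyze(text, api_key):
    # Send one comment to the API and return its toxicity score (needs a real API key).
    body = json.dumps(build_request(text)).encode("utf-8")
    req = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return extract_score(json.load(resp))


def should_hold_for_review(score, threshold=0.8):
    # Hypothetical policy: route high-scoring comments to a human moderator.
    return score >= threshold
```

Rather than deleting comments automatically, a site could use the score to send only the most likely trolls to its moderators, which is how a tool like this would stretch a limited moderation team.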

Source: Google Blog